BPMN 2.0 Constructs

This chapter covers the BPMN 20 constructs supported by Flowable, as well as custom extensions to the BPMN standard.

Custom extensions

The BPMN 2.0 standard is a good thing for all parties involved. End-users don’t suffer from vendor lock-in that comes from depending on a proprietary solution. Frameworks, and particularly open-source frameworks such as Flowable, can implement a solution that has the same (and often better implemented ;-) features as those of a big vendor. Thanks to the BPMN 2.0 standard, the transition from such a big vendor solution towards Flowable can be an easy and smooth path.

The downside of a standard, however, is the fact that it is always the result of many discussions and compromises between different companies (and often visions). As a developer reading the BPMN 2.0 XML of a process definition, sometimes it feels like certain constructs or ways to do things are very cumbersome. As Flowable puts ease of development as a top-priority, we introduced something called the 'Flowable BPMN extensions'. These 'extensions' are new constructs or ways to simplify certain constructs that are not part of the BPMN 2.0 specification.

Although the BPMN 2.0 specification clearly states that it was designed for custom extension, we make sure that:

There always must be a simple transformation to the standard way of doing things, as a prerequisite of such a custom extension. So when you decide to use a custom extension, you don’t have to be concerned that there is no way back.

When using a custom extension, it is always clearly indicated by giving the new XML element, attribute, and so on, the flowable: namespace prefix. Note that the Flowable engine also supports the activiti: namespace prefix.

Whether you want to use a custom extension or not is completely up to you. Several factors will influence this decision (graphical editor usage, company policy, and so on). We only provide them as we believe that some points in the standard can be done in a simpler or more efficient way. Feel free to give us (positive or negative) feedback on our extensions, or to post new ideas for custom extensions. Who knows, some day your idea might pop up in the specification!

Events

Events are used to model something that happens during the lifetime of a process. Events are always visualized as a circle. In BPMN 2.0, there exist two main event categories: catching and throwing events.

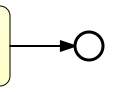

Catching: when process execution arrives at the event, it will wait for a trigger to happen. The type of trigger is defined by the inner icon or the type declaration in the XML. Catching events are visually differentiated from a throwing event by the inner icon that is not filled (it’s just white).

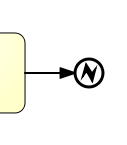

Throwing: when process execution arrives at the event, a trigger is fired. The type of trigger is defined by the inner icon or the type declaration in the XML. Throwing events are visually differentiated from a catching event by the inner icon that is filled with black.

Event Definitions

Event definitions define the semantics of an event. Without an event definition, an event "does nothing special". For instance, a start event without an event definition has nothing to specify what exactly starts the process. If we add an event definition to the start event (for example, a timer event definition), we declare what "type" of event starts the process (in the case of a timer event definition, the fact that a certain point in time is reached).

Timer Event Definitions

Timer events are events that are triggered by a defined timer. They can be used as start event, intermediate event or boundary event. The behavior of the time event depends on the business calendar used. Every timer event has a default business calendar, but the business calendar can also be given as part of the timer event definition.

<timerEventDefinition flowable:businessCalendarName="custom">

...

</timerEventDefinition>

Where businessCalendarName points to a business calendar in the process engine configuration. When business calendar is omitted, default business calendars are used.

The timer definition must have exactly one element from the following:

- timeDate. This format specifies a fixed date in ISO 8601 format, when trigger will be fired. For example:

<timerEventDefinition>

<timeDate>2011-03-11T12:13:14</timeDate>

</timerEventDefinition>

- timeDuration. To specify how long the timer should run before it is fired, a timeDuration can be specified as a sub-element of timerEventDefinition. The format used is the ISO 8601 format (as required by the BPMN 2.0 specification). For example (interval lasting 10 days):

<timerEventDefinition>

<timeDuration>P10D</timeDuration>

</timerEventDefinition>

- timeCycle. Specifies a repeating interval, which can be useful for starting process periodically, or for sending multiple reminders for overdue user task. A time cycle element can be in one of two formats. First, is the format of recurring time duration as specified by ISO 8601 standard. Example (3 repeating intervals, each lasting 10 hours):

It is also possible to specify the endDate as an optional attribute on the timeCycle or either in the end of the time expression as follows: R3/PT10H/${EndDate}. When the endDate is reached, the application will stop creating other jobs for this task. It accepts as a value either static values ISO 8601 standard, for example, "2015-02-25T16:42:11+00:00", or variables, for example, ${EndDate}

<timerEventDefinition>

<timeCycle flowable:endDate="2015-02-25T16:42:11+00:00">R3/PT10H</timeCycle>

</timerEventDefinition>

<timerEventDefinition>

<timeCycle>R3/PT10H/${EndDate}</timeCycle>

</timerEventDefinition>

If both are specified, then the endDate specified as attribute will be used by the system.

Currently, only the BoundaryTimerEvents and CatchTimerEvent support EndDate functionality.

Additionally, you can specify time cycle using cron expressions; the example below shows trigger firing every 5 minutes, starting at full hour:

0 0/5 * * * ?

Please see this tutorial for using cron expressions.

Note: The first symbol denotes seconds, not minutes as in normal Unix cron.

The recurring time duration is better suited for handling relative timers, which are calculated with respect to some particular point in time (for example, the time when a user task was started), while cron expressions can handle absolute timers, which is particularly useful for timer start events.

You can use expressions for timer event definitions, and by doing so, you can influence the timer definition

based on process variables. The process variables must contain the ISO 8601 (or cron for cycle type) string for appropriate timer type.

Additionally, for durations variables of type or expressions that return java.time.Duration can be used.

<boundaryEvent id="escalationTimer" cancelActivity="true" attachedToRef="firstLineSupport">

<timerEventDefinition>

<timeDuration>${duration}</timeDuration>

</timerEventDefinition>

</boundaryEvent>

Note: timers are only fired when the async executor is enabled (asyncExecutorActivate must be set to true in the flowable.cfg.xml, because the async executor is disabled by default).

Error Event Definitions

Important note: a BPMN error is NOT the same as a Java exception. In fact, the two have nothing in common. BPMN error events are a way of modeling business exceptions. Java exceptions are handled in their own specific way.

<endEvent id="myErrorEndEvent">

<errorEventDefinition errorRef="myError" />

</endEvent>

Signal Event Definitions

Signal events are events that reference a named signal. A signal is an event of global scope (broadcast semantics) and is delivered to all active handlers (waiting process instances/catching signal events).

A signal event definition is declared using the signalEventDefinition element. The attribute signalRef references a signal element declared as a child element of the definitions root element. The following is an excerpt of a process where a signal event is thrown and caught by intermediate events.

<definitions... >

<!-- declaration of the signal -->

<signal id="alertSignal" name="alert" />

<process id="catchSignal">

<intermediateThrowEvent id="throwSignalEvent" name="Alert">

<!-- signal event definition -->

<signalEventDefinition signalRef="alertSignal" />

</intermediateThrowEvent>

...

<intermediateCatchEvent id="catchSignalEvent" name="On Alert">

<!-- signal event definition -->

<signalEventDefinition signalRef="alertSignal" />

</intermediateCatchEvent>

...

</process>

</definitions>

The signalEventDefinitions reference the same signal element.

Throwing a Signal Event

A signal can either be thrown by a process instance using a BPMN construct or programmatically using the Java API. The following methods on the org.flowable.engine.RuntimeService can be used to throw a signal programmatically:

RuntimeService.signalEventReceived(String signalName);

RuntimeService.signalEventReceived(String signalName, String executionId);

The difference between signalEventReceived(String signalName) and signalEventReceived(String signalName, String executionId) is that the first method throws the signal globally to all subscribed handlers (broadcast semantics) and the second method delivers the signal to a specific execution only.

Catching a Signal Event

A signal event can be caught by an intermediate catch signal event or a signal boundary event.

Querying for Signal Event subscriptions

It’s possible to query for all executions that have subscribed to a specific signal event:

List<Execution> executions = runtimeService.createExecutionQuery()

.signalEventSubscriptionName("alert")

.list();

We can then use the signalEventReceived(String signalName, String executionId) method to deliver the signal to these executions.

Signal event scope

By default, signals are broadcast process engine wide. This means that you can throw a signal event in a process instance, and other process instances with different process definitions can react on the occurrence of this event.

However, sometimes it is desirable to react to a signal event only within the same process instance. A use case, for example, is a synchronization mechanism in the process instance when two or more activities are mutually exclusive.

To restrict the scope of the signal event, add the (non-BPMN 2.0 standard!) scope attribute to the signal event definition:

<signal id="alertSignal" name="alert" flowable:scope="processInstance"/>

The default value for this is attribute is "global".

Signal Event example(s)

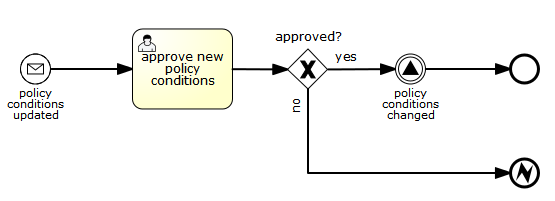

The following is an example of two separate processes communicating using signals. The first process is started if an insurance policy is updated or changed. After the changes have been reviewed by a human participant, a signal event is thrown, signaling that a policy has changed:

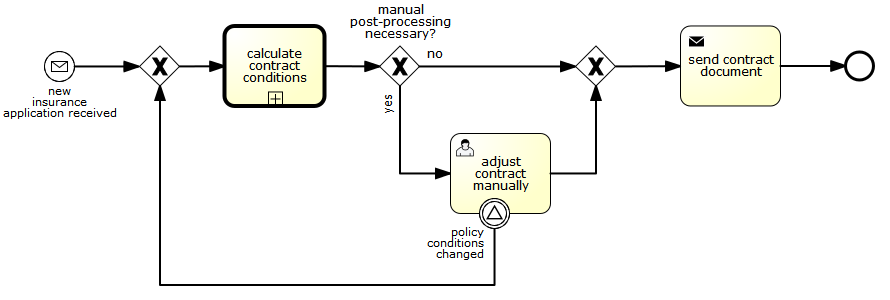

This event can now be caught by all process instances that are interested. The following is an example of a process subscribing to the event.

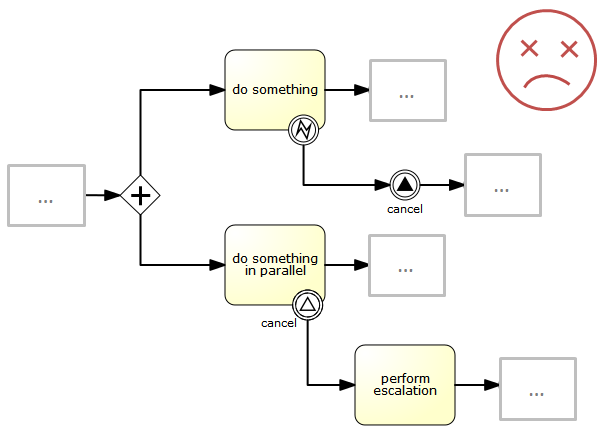

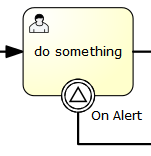

Note: it’s important to understand that a signal event is broadcast to all active handlers. This means, in the case of the example given above, that all instances of the process catching the signal will receive the event. In this scenario, this is what we want. However, there are also situations where the broadcast behavior is unintended. Consider the following process:

The pattern described in the process above is not supported by BPMN. The idea is that the error thrown while performing the "do something" task is caught by the boundary error event, propagated to the parallel path of execution using the signal throw event and then interrupt the "do something in parallel" task. So far, Flowable would perform as expected. The signal would be propagated to the catching boundary event and interrupt the task. However, due to the broadcast semantics of the signal, it would also be propagated to all other process instances that have subscribed to the signal event. In this case, this might not be what we want.

Note: the signal event does not perform any kind of correlation to a specific process instance. On the contrary, it is broadcast to all process instances. If you need to deliver a signal to a specific process instance only, perform the correlation manually and use signalEventReceived(String signalName, String executionId) along with the appropriate query mechanisms.

Flowable does have a way to fix this by adding the scope attribute to the signal event set to processInstance.

Message Event Definitions

Message events are events that reference a named message. A message has a name and a payload. Unlike a signal, a message event is always directed at a single receiver.

A message event definition is declared using the messageEventDefinition element. The attribute messageRef references a message element declared as a child element of the definitions root element. The following is an excerpt of a process where two message events is declared and referenced by a start event and an intermediate catching message event.

<definitions id="definitions"

xmlns="http://www.omg.org/spec/BPMN/20100524/MODEL"

xmlns:flowable="http://flowable.org/bpmn"

targetNamespace="Examples"

xmlns:tns="Examples">

<message id="newInvoice" name="newInvoiceMessage" />

<message id="payment" name="paymentMessage" />

<process id="invoiceProcess">

<startEvent id="messageStart" >

<messageEventDefinition messageRef="newInvoice" />

</startEvent>

...

<intermediateCatchEvent id="paymentEvt" >

<messageEventDefinition messageRef="payment" />

</intermediateCatchEvent>

...

</process>

</definitions>

Throwing a Message Event

As an embeddable process engine, Flowable is not concerned with actually receiving a message. This would be environment dependent and entail platform-specific activities, such as connecting to a JMS (Java Messaging Service) Queue/Topic or processing a Webservice or REST request. The reception of messages is therefore something you have to implement as part of the application or infrastructure into which the process engine is embedded.

After you have received a message inside your application, you must decide what to do with it. If the message should trigger the start of a new process instance, choose between the following methods offered by the runtime service:

ProcessInstance startProcessInstanceByMessage(String messageName);

ProcessInstance startProcessInstanceByMessage(String messageName, Map<String, Object> processVariables);

ProcessInstance startProcessInstanceByMessage(String messageName, String businessKey,

Map<String, Object> processVariables);

These methods start a process instance using the referenced message.

If the message needs to be received by an existing process instance, you first have to correlate the message to a specific process instance (see next section) and then trigger the continuation of the waiting execution. The runtime service offers the following methods for triggering an execution based on a message event subscription:

void messageEventReceived(String messageName, String executionId);

void messageEventReceived(String messageName, String executionId, HashMap<String, Object> processVariables);

Querying for Message Event subscriptions

- In the case of a message start event, the message event subscription is associated with a particular process definition. Such message subscriptions can be queried using a ProcessDefinitionQuery:

ProcessDefinition processDefinition = repositoryService.createProcessDefinitionQuery()

.messageEventSubscription("newCallCenterBooking")

.singleResult();

Since there can only be one process definition for a specific message subscription, the query always returns zero or one result. If a process definition is updated, only the newest version of the process definition has a subscription to the message event.

- In the case of an intermediate catch message event, the message event subscription is associated with a particular execution. Such message event subscriptions can be queried using a ExecutionQuery:

Execution execution = runtimeService.createExecutionQuery()

.messageEventSubscriptionName("paymentReceived")

.variableValueEquals("orderId", message.getOrderId())

.singleResult();

Such queries are called correlation queries and usually require knowledge about the processes (in this case, that there will be at most one process instance for a given orderId).

Message Event example(s)

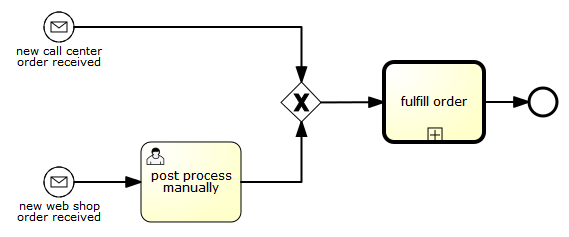

The following is an example of a process that can be started using two different messages:

This is useful if the process needs alternative ways to react to different start events, but eventually continues in a uniform way.

Start Events

A start event indicates where a process starts. The type of start event (process starts on arrival of message, on specific time intervals, and so on), defining how the process is started, is shown as a small icon in the visual representation of the event. In the XML representation, the type is given by the declaration of a sub-element.

Start events are always catching: conceptually the event is (at any time) waiting until a certain trigger happens.

In a start event, the following Flowable-specific properties can be specified:

- initiator: identifies the variable name in which the authenticated user ID will be stored when the process is started. For example:

<startEvent id="request" flowable:initiator="initiator" />

The authenticated user must be set with the method IdentityService.setAuthenticatedUserId(String) in a try-finally block, like this:

try {

identityService.setAuthenticatedUserId("bono");

runtimeService.startProcessInstanceByKey("someProcessKey");

} finally {

identityService.setAuthenticatedUserId(null);

}

This code is baked into the Flowable application, so it works in combination with ???.

None Start Event

Description

A 'none' start event technically means that the trigger for starting the process instance is unspecified. This means that the engine cannot anticipate when the process instance must be started. The none start event is used when the process instance is started through the API by calling one of the startProcessInstanceByXXX methods.

ProcessInstance processInstance = runtimeService.startProcessInstanceByXXX();

Note: a sub-process always has a none start event.

Graphical notation

A none start event is visualized as a circle with no inner icon (in other words, no trigger type).

XML representation

The XML representation of a none start event is the normal start event declaration without any sub-element (other start event types all have a sub-element declaring the type).

<startEvent id="start" name="my start event" />

Custom extensions for the none start event

formKey: references a form definition that users have to fill in when starting a new process instance. More information can be found in the forms section Example:

<startEvent id="request" flowable:formKey="request" />

Timer Start Event

Description

A timer start event is used to create process instances at given time. It can be used both for processes that should start only once and for processes that should start in specific time intervals.

Note: a sub-process cannot have a timer start event.

Note: a start timer event is scheduled as soon as process is deployed. There is no need to call startProcessInstanceByXXX, although calling start process methods is not restricted and will cause one more starting of the process at the time of startProcessInstanceByXXX invocation.

Note: when a new version of a process with a start timer event is deployed, the job corresponding with the previous timer will be removed. The reasoning is that normally it is not desirable to keep automatically starting new process instances of the old version of the process.

Graphical notation

A timer start event is visualized as a circle with clock inner icon.

XML representation

The XML representation of a timer start event is the normal start event declaration, with timer definition sub-element. Please refer to timer definitions for configuration details.

Example: process will start 4 times, in 5 minute intervals, starting on 11th march 2011, 12:13

<startEvent id="theStart">

<timerEventDefinition>

<timeCycle>R4/2011-03-11T12:13/PT5M</timeCycle>

</timerEventDefinition>

</startEvent>

Example: process will start once, on selected date

<startEvent id="theStart">

<timerEventDefinition>

<timeDate>2011-03-11T12:13:14</timeDate>

</timerEventDefinition>

</startEvent>

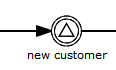

Message Start Event

Description

A message start event can be used to start a process instance using a named message. This effectively allows us to select the right start event from a set of alternative start events using the message name.

When deploying a process definition with one or more message start events, the following considerations apply:

The name of the message start event must be unique across a given process definition. A process definition must not have multiple message start events with the same name. Flowable throws an exception upon deployment of a process definition containing two or more message start events referencing the same message, or if two or more message start events reference messages with the same message name.

The name of the message start event must be unique across all deployed process definitions. Flowable throws an exception upon deployment of a process definition containing one or more message start events referencing a message with the same name as a message start event already deployed by a different process definition.

Process versioning: Upon deployment of a new version of a process definition, the start message subscriptions of the previous version are removed.

When starting a process instance, a message start event can be triggered using the following methods on the RuntimeService:

ProcessInstance startProcessInstanceByMessage(String messageName);

ProcessInstance startProcessInstanceByMessage(String messageName, Map<String, Object> processVariables);

ProcessInstance startProcessInstanceByMessage(String messageName, String businessKey,

Map<String, Object< processVariables);

The messageName is the name given in the name attribute of the message element referenced by the messageRef attribute of the messageEventDefinition. The following considerations apply when starting a process instance:

Message start events are only supported on top-level processes. Message start events are not supported on embedded sub processes.

If a process definition has multiple message start events, runtimeService.startProcessInstanceByMessage(...) allows to select the appropriate start event.

If a process definition has multiple message start events and a single none start event, runtimeService.startProcessInstanceByKey(...) and runtimeService.startProcessInstanceById(...) starts a process instance using the none start event.

If a process definition has multiple message start events and no none start event, runtimeService.startProcessInstanceByKey(...) and runtimeService.startProcessInstanceById(...) throw an exception.

If a process definition has a single message start event, runtimeService.startProcessInstanceByKey(...) and runtimeService.startProcessInstanceById(...) start a new process instance using the message start event.

If a process is started from a call activity, message start event(s) are only supported if

in addition to the message start event(s), the process has a single none start event

the process has a single message start event and no other start events.

Graphical notation

A message start event is visualized as a circle with a message event symbol. The symbol is unfilled, to represent the catching (receiving) behavior.

XML representation

The XML representation of a message start event is the normal start event declaration with a messageEventDefinition child-element:

<definitions id="definitions"

xmlns="http://www.omg.org/spec/BPMN/20100524/MODEL"

xmlns:flowable="http://flowable.org/bpmn"

targetNamespace="Examples"

xmlns:tns="Examples">

<message id="newInvoice" name="newInvoiceMessage" />

<process id="invoiceProcess">

<startEvent id="messageStart" >

<messageEventDefinition messageRef="tns:newInvoice" />

</startEvent>

...

</process>

</definitions>

Signal Start Event

Description

A signal start event can be used to start a process instance using a named signal. The signal can be 'fired' from within a process instance using the intermediary signal throw event or through the API (runtimeService.signalEventReceivedXXX methods). In both cases, all process definitions that have a signal start event with the same name will be started.

Note that in both cases, it is also possible to choose between a synchronous and asynchronous starting of the process instances.

The signalName that must be passed in the API is the name given in the name attribute of the signal element referenced by the signalRef attribute of the signalEventDefinition.

Graphical notation

A signal start event is visualized as a circle with a signal event symbol. The symbol is unfilled, to represent the catching (receiving) behavior.

XML representation

The XML representation of a signal start event is the normal start event declaration with a signalEventDefinition child-element:

<signal id="theSignal" name="The Signal" />

<process id="processWithSignalStart1">

<startEvent id="theStart">

<signalEventDefinition id="theSignalEventDefinition" signalRef="theSignal" />

</startEvent>

<sequenceFlow id="flow1" sourceRef="theStart" targetRef="theTask" />

<userTask id="theTask" name="Task in process A" />

<sequenceFlow id="flow2" sourceRef="theTask" targetRef="theEnd" />

<endEvent id="theEnd" />

</process>

Error Start Event

Description

An error start event can be used to trigger an Event Sub-Process. An error start event cannot be used for starting a process instance.

An error start event is always interrupting.

Graphical notation

An error start event is visualized as a circle with an error event symbol. The symbol is unfilled, to represent the catching (receiving) behavior.

XML representation

The XML representation of an error start event is the normal start event declaration with an errorEventDefinition child-element:

<startEvent id="messageStart" >

<errorEventDefinition errorRef="someError" />

</startEvent>

End Events

An end event signifies the end of a path in a process or sub-process. An end event is always throwing. This means that when process execution arrives at an end event, a result is thrown. The type of result is depicted by the inner black icon of the event. In the XML representation, the type is given by the declaration of a sub-element.

None End Event

Description

A 'none' end event means that the result thrown when the event is reached is unspecified. As such, the engine will not do anything extra besides ending the current path of execution.

Graphical notation

A none end event is visualized as a circle with a thick border with no inner icon (no result type).

XML representation

The XML representation of a none end event is the normal end event declaration, without any sub-element (other end event types all have a sub-element declaring the type).

<endEvent id="end" name="my end event" />

Error End Event

Description

When process execution arrives at an error end event, the current path of execution ends and an error is thrown. This error can caught by a matching intermediate boundary error event. If no matching boundary error event is found, an exception will be thrown.

Graphical notation

An error end event is visualized as a typical end event (circle with thick border), with the error icon inside. The error icon is completely black, to indicate its throwing semantics.

XML representation

An error end event is represented as an end event, with an errorEventDefinition child element.

<endEvent id="myErrorEndEvent">

<errorEventDefinition errorRef="myError" />

</endEvent>

The errorRef attribute can reference an error element that is defined outside the process:

<error id="myError" errorCode="123" />

...

<process id="myProcess">

...

The errorCode of the error will be used to find the matching catching boundary error event. If the errorRef doesn’t match any defined error, then the errorRef is used as a shortcut for the errorCode. This is a Flowable specific shortcut. More concretely, the following snippets are equivalent in functionality.

<error id="myError" errorCode="error123" />

...

<process id="myProcess">

...

<endEvent id="myErrorEndEvent">

<errorEventDefinition errorRef="myError" />

</endEvent>

...

is equivalent with

<endEvent id="myErrorEndEvent">

<errorEventDefinition errorRef="error123" />

</endEvent>

Note that the errorRef must comply with the BPMN 2.0 schema, and must be a valid QName.

Terminate End Event

Description

When a terminate end event is reached, the current process instance or sub-process will be terminated. Conceptually, when an execution arrives at a terminate end event, the first scope (process or sub-process) will be determined and ended. Note that in BPMN 2.0, a sub-process can be an embedded sub-process, call activity, event sub-process or transaction sub-process. This rule applies in general: when, for example, there is a multi-instance call activity or embedded sub-process, only that instance will end, the other instances and the process instance are not affected.

There is an optional attribute terminateAll that can be added. When true, regardless of the placement of the terminate end event in the process definition and regardless of being in a sub-process (even nested), the (root) process instance will be terminated.

Graphical notation

A cancel end event visualized as a typical end event (circle with thick outline), with a full black circle inside.

XML representation

A terminate end event is represented as an end event, with a terminateEventDefinition child element.

Note that the terminateAll attribute is optional (and false by default).

<endEvent id="myEndEvent >

<terminateEventDefinition flowable:terminateAll="true"></terminateEventDefinition>

</endEvent>

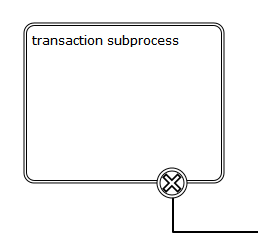

Cancel End Event

Description

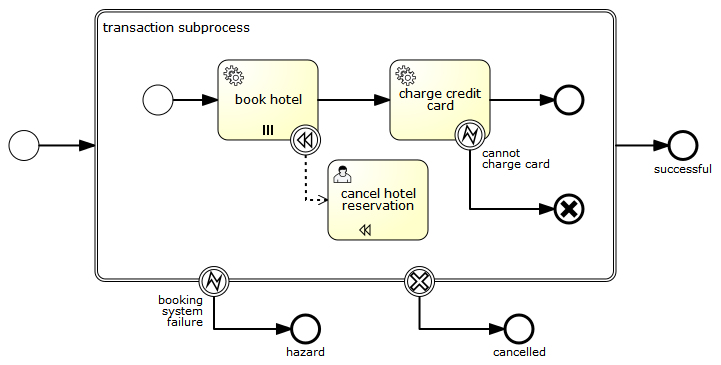

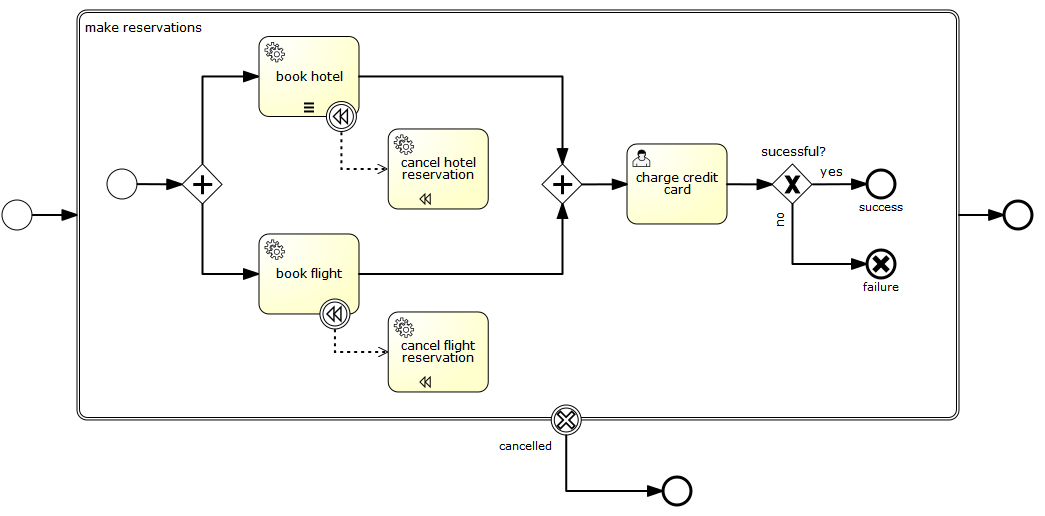

The cancel end event can only be used in combination with a BPMN transaction sub-process. When the cancel end event is reached, a cancel event is thrown which must be caught by a cancel boundary event. The cancel boundary event then cancels the transaction and triggers compensation.

Graphical notation

A cancel end event is visualized as a typical end event (circle with thick outline), with the cancel icon inside. The cancel icon is completely black, to indicate its throwing semantics.

XML representation

A cancel end event is represented as an end event, with a cancelEventDefinition child element.

<endEvent id="myCancelEndEvent">

<cancelEventDefinition />

</endEvent>

Boundary Events

Boundary events are catching events that are attached to an activity (a boundary event can never be throwing). This means that while the activity is running, the event is listening for a certain type of trigger. When the event is caught, the activity is interrupted and the sequence flow going out of the event is followed.

All boundary events are defined in the same way:

<boundaryEvent id="myBoundaryEvent" attachedToRef="theActivity">

<XXXEventDefinition/>

</boundaryEvent>

A boundary event is defined with

A unique identifier (process-wide)

A reference to the activity to which the event is attached through the attachedToRef attribute. Note that a boundary event is defined on the same level as the activities to which they are attached (in other words, no inclusion of the boundary event inside the activity).

An XML sub-element of the form XXXEventDefinition (for example, TimerEventDefinition, ErrorEventDefinition, and so on) defining the type of the boundary event. See the specific boundary event types for more details.

Timer Boundary Event

Description

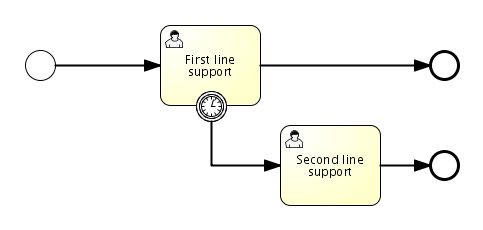

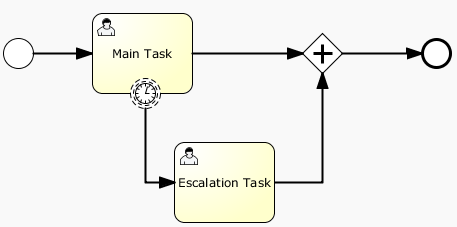

A timer boundary event acts as a stopwatch and alarm clock. When an execution arrives at the activity where the boundary event is attached, a timer is started. When the timer fires (for example, after a specified interval), the activity is interrupted and the sequence flow going out of the boundary event is followed.

Graphical Notation

A timer boundary event is visualized as a typical boundary event (circle on the border), with the timer icon on the inside.

XML Representation

A timer boundary event is defined as a regular boundary event. The specific type sub-element in this case is a timerEventDefinition element.

<boundaryEvent id="escalationTimer" cancelActivity="true" attachedToRef="firstLineSupport">

<timerEventDefinition>

<timeDuration>PT4H</timeDuration>

</timerEventDefinition>

</boundaryEvent>

Please refer to timer event definition for details on timer configuration.

In the graphical representation, the line of the circle is dotted as you can see in the example above:

A typical use case is sending an escalation email after a period of time, but without affecting the normal process flow.

There is a key difference between the interrupting and non interrupting timer event. Non-interrupting means the original activity is not interrupted but stays as it was. The interrupting behavior is the default. In the XML representation, the cancelActivity attribute is set to false:

<boundaryEvent id="escalationTimer" cancelActivity="false" attachedToRef="firstLineSupport"/>

Note: boundary timer events are only fired when the async executor is enabled (asyncExecutorActivate needs to be set to true in the flowable.cfg.xml, since the async executor is disabled by default).

Known issue with boundary events

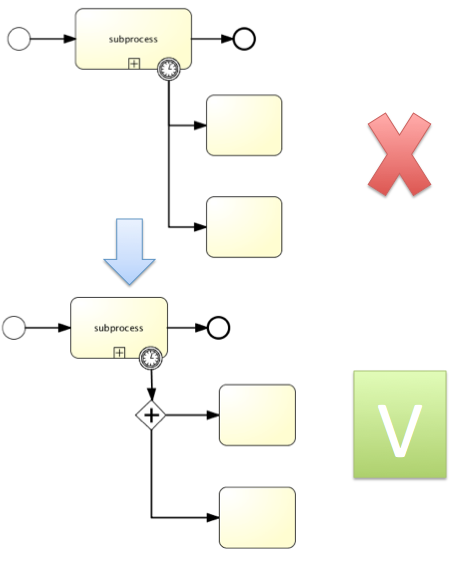

There is a known issue regarding concurrency when using boundary events of any type. Currently, it is not possible to have multiple outgoing sequence flows attached to a boundary event. A solution to this problem is to use one outgoing sequence flow that goes to a parallel gateway.

Error Boundary Event

Description

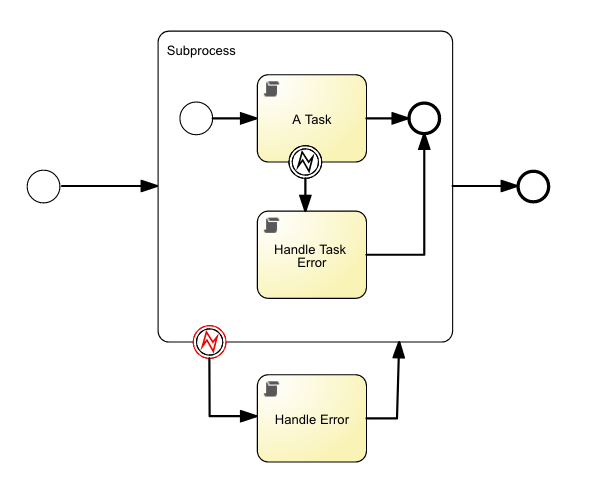

An intermediate catching error on the boundary of an activity, or boundary error event for short, catches errors that are thrown within the scope of the activity on which it is defined.

Defining a boundary error event makes most sense on an embedded sub-process, or a call activity, as a sub-process creates a scope for all activities inside the sub-process. Errors are thrown by error end events. Such an error will propagate its parent scopes upwards until a scope is found on which a boundary error event is defined that matches the error event definition.

When an error event is caught, the activity on which the boundary event is defined is destroyed, also destroying all current executions within (concurrent activities, nested sub-processes, and so on). Process execution continues following the outgoing sequence flow of the boundary event.

Graphical notation

A boundary error event is visualized as a typical intermediate event (circle with smaller circle inside) on the boundary, with the error icon inside. The error icon is white, to indicate its catch semantics.

XML representation

A boundary error event is defined as a typical boundary event:

<boundaryEvent id="catchError" attachedToRef="mySubProcess">

<errorEventDefinition errorRef="myError"/>

</boundaryEvent>

As with the error end event, the errorRef references an error defined outside the process element:

<error id="myError" errorCode="123" />

...

<process id="myProcess">

...

The errorCode is used to match the errors that are caught:

If errorRef is omitted, the boundary error event will catch any error event, regardless of the errorCode of the error.

If an errorRef is provided and it references an existing error, the boundary event will only catch errors with the same error code.

If an errorRef is provided, but no error is defined in the BPMN 2.0 file, then the errorRef is used as errorCode (similar for with error end events).

Example

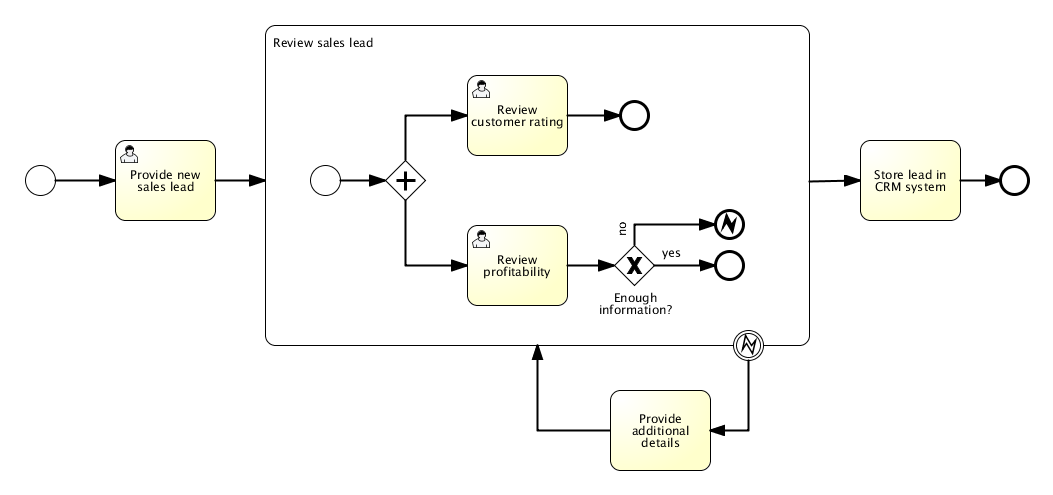

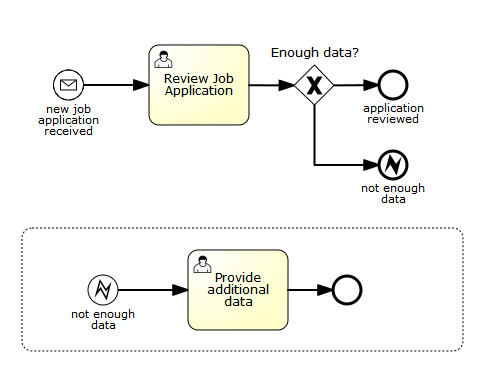

The following example process shows how an error end event can be used. When the 'Review profitability' user task is completed by saying that not enough information is provided, an error is thrown. When this error is caught on the boundary of the sub-process, all active activities within the 'Review sales lead' sub-process are destroyed (even if 'Review customer rating' had not yet been completed), and the 'Provide additional details' user task is created.

This process is shipped as example in the demo setup. The process XML and unit test can be found in the org.flowable.examples.bpmn.event.error package.

Signal Boundary Event

Description

An attached intermediate catching signal on the boundary of an activity, or boundary signal event for short, catches signals with the same signal name as the referenced signal definition.

Note: contrary to other events, such as the boundary error event, a boundary signal event doesn’t only catch signal events thrown from the scope to which it is attached. On the contrary, a signal event has global scope (broadcast semantics), meaning that the signal can be thrown from any place, even from a different process instance.

Note: contrary to other events, such as the error event, a signal is not consumed if it is caught. If you have two active signal boundary events catching the same signal event, both boundary events are triggered, even if they are part of different process instances.

Graphical notation

A boundary signal event is visualized as a typical intermediate event (circle with smaller circle inside) on the boundary, with the signal icon inside. The signal icon is white (unfilled), to indicate its catch semantics.

XML representation

A boundary signal event is defined as a typical boundary event:

<boundaryEvent id="boundary" attachedToRef="task" cancelActivity="true">

<signalEventDefinition signalRef="alertSignal"/>

</boundaryEvent>

Example

See the section on signal event definitions.

Message Boundary Event

Description

An attached intermediate catching message on the boundary of an activity, or boundary message event for short, catches messages with the same message name as the referenced message definition.

Graphical notation

A boundary message event is visualized as a typical intermediate event (circle with smaller circle inside) on the boundary, with the message icon inside. The message icon is white (unfilled), to indicate its catch semantics.

Note that boundary message event can be both interrupting (right-hand side) and non-interrupting (left-hand side).

XML representation

A boundary message event is defined as a typical boundary event:

<boundaryEvent id="boundary" attachedToRef="task" cancelActivity="true">

<messageEventDefinition messageRef="newCustomerMessage"/>

</boundaryEvent>

Example

See the section on message event definitions.

Cancel Boundary Event

Description

An attached intermediate catching cancel event on the boundary of a transaction sub-process, or boundary cancel event for short, is triggered when a transaction is canceled. When the cancel boundary event is triggered, it first interrupts all active executions in the current scope. Next, it starts compensation for all active compensation boundary events in the scope of the transaction. Compensation is performed synchronously, in other words, the boundary event waits before compensation is completed before leaving the transaction. When compensation is completed, the transaction sub-process is left using any sequence flows running out of the cancel boundary event.

Note: Only a single cancel boundary event is allowed for a transaction sub-process.

Note: If the transaction sub-process hosts nested sub-processes, compensation is only triggered for sub-processes that have completed successfully.

Note: If a cancel boundary event is placed on a transaction sub-process with multi instance characteristics, if one instance triggers cancellation, the boundary event cancels all instances.

Graphical notation

A cancel boundary event is visualized as a typical intermediate event (circle with smaller circle inside) on the boundary, with the cancel icon inside. The cancel icon is white (unfilled), to indicate its catching semantics.

XML representation

A cancel boundary event is defined as a typical boundary event:

<boundaryEvent id="boundary" attachedToRef="transaction" >

<cancelEventDefinition />

</boundaryEvent>

As the cancel boundary event is always interrupting, the cancelActivity attribute is not required.

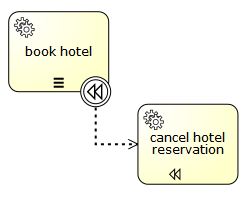

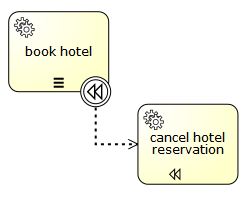

Compensation Boundary Event

Description

An attached intermediate catching compensation on the boundary of an activity or compensation boundary event for short, can be used to attach a compensation handler to an activity.

The compensation boundary event must reference a single compensation handler using a directed association.

A compensation boundary event has a different activation policy from other boundary events. Other boundary events, such as the signal boundary event, are activated when the activity they are attached to is started. When the activity is finished, they are deactivated and the corresponding event subscription is canceled. The compensation boundary event is different. The compensation boundary event is activated when the activity it is attached to completes successfully. At this point, the corresponding subscription to the compensation events is created. The subscription is removed either when a compensation event is triggered or when the corresponding process instance ends. From this, it follows:

When compensation is triggered, the compensation handler associated with the compensation boundary event is invoked the same number of times the activity it is attached to completed successfully.

If a compensation boundary event is attached to an activity with multiple instance characteristics, a compensation event subscription is created for each instance.

If a compensation boundary event is attached to an activity that is contained inside a loop, a compensation event subscription is created each time the activity is executed.

If the process instance ends, the subscriptions to compensation events are canceled.

Note: the compensation boundary event is not supported on embedded sub-processes.

Graphical notation

A compensation boundary event is visualized as a typical intermediate event (circle with smaller circle inside) on the boundary, with the compensation icon inside. The compensation icon is white (unfilled), to indicate its catching semantics. In addition to a compensation boundary event, the following figure shows a compensation handler associated with the boundary event using a unidirectional association:

XML representation

A compensation boundary event is defined as a typical boundary event:

<boundaryEvent id="compensateBookHotelEvt" attachedToRef="bookHotel" >

<compensateEventDefinition />

</boundaryEvent>

<association associationDirection="One" id="a1"

sourceRef="compensateBookHotelEvt" targetRef="undoBookHotel" />

<serviceTask id="undoBookHotel" isForCompensation="true" flowable:class="..." />

As the compensation boundary event is activated after the activity has completed successfully, the cancelActivity attribute is not supported.

Intermediate Catching Events

All intermediate catching events are defined in the same way:

<intermediateCatchEvent id="myIntermediateCatchEvent" >

<XXXEventDefinition/>

</intermediateCatchEvent>

An intermediate catching event is defined with:

A unique identifier (process-wide)

An XML sub-element of the form XXXEventDefinition (for example, TimerEventDefinition) defining the type of the intermediate catching event. See the specific catching event types for more details.

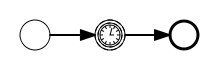

Timer Intermediate Catching Event

Description

A timer intermediate event acts as a stopwatch. When an execution arrives at a catching event activity, a timer is started. When the timer fires (for example, after a specified interval), the sequence flow going out of the timer intermediate event is followed.

Graphical Notation

A timer intermediate event is visualized as an intermediate catching event, with the timer icon on the inside.

XML Representation

A timer intermediate event is defined as an intermediate catching event. The specific type sub-element is, in this case, a timerEventDefinition element.

<intermediateCatchEvent id="timer">

<timerEventDefinition>

<timeDuration>PT5M</timeDuration>

</timerEventDefinition>

</intermediateCatchEvent>

See timer event definitions for configuration details.

Signal Intermediate Catching Event

Description

An intermediate catching signal event catches signals with the same signal name as the referenced signal definition.

Note: contrary to other events, such as an error event, a signal is not consumed if it is caught. If you have two active signal boundary events catching the same signal event, both boundary events are triggered, even if they are part of different process instances.

Graphical notation

An intermediate signal catch event is visualized as a typical intermediate event (circle with smaller circle inside), with the signal icon inside. The signal icon is white (unfilled), to indicate its catch semantics.

XML representation

A signal intermediate event is defined as an intermediate catching event. The specific type sub-element is in this case a signalEventDefinition element.

<intermediateCatchEvent id="signal">

<signalEventDefinition signalRef="newCustomerSignal" />

</intermediateCatchEvent>

Example

See the section on signal event definitions.

Message Intermediate Catching Event

Description

An intermediate catching message event catches messages with a specified name.

Graphical notation

An intermediate catching message event is visualized as a typical intermediate event (circle with smaller circle inside), with the message icon inside. The message icon is white (unfilled), to indicate its catch semantics.

XML representation

A message intermediate event is defined as an intermediate catching event. The specific type sub-element is in this case a messageEventDefinition element.

<intermediateCatchEvent id="message">

<messageEventDefinition signalRef="newCustomerMessage" />

</intermediateCatchEvent>

Example

See the section on message event definitions.

Intermediate Throwing Event

All intermediate throwing events are defined in the same way:

<intermediateThrowEvent id="myIntermediateThrowEvent" >

<XXXEventDefinition/>

</intermediateThrowEvent>

An intermediate throwing event is defined with:

A unique identifier (process-wide)

An XML sub-element of the form XXXEventDefinition (for example, signalEventDefinition) defining the type of the intermediate throwing event. See the specific throwing event types for more details.

Intermediate Throwing None Event

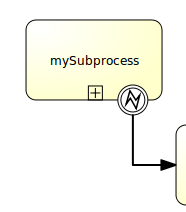

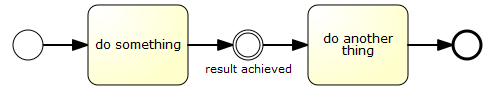

The following process diagram shows a simple example of an intermediate none event, which is often used to indicate some state achieved in the process.

This can be a good hook to monitor some KPIs, by adding an execution listener.

<intermediateThrowEvent id="noneEvent">

<extensionElements>

<flowable:executionListener class="org.flowable.engine.test.bpmn.event.IntermediateNoneEventTest$MyExecutionListener" event="start" />

</extensionElements>

</intermediateThrowEvent>

Here you can add some of your own code to maybe send some event to your BAM tool or DWH. The engine itself doesn’t do anything in that case, it just passes through.

Signal Intermediate Throwing Event

Description

An intermediate throwing signal event throws a signal event for a defined signal.

In Flowable, the signal is broadcast to all active handlers (in other words, all catching signal events). Signals can be published synchronously or asynchronously.

In the default configuration, the signal is delivered synchronously. This means that the throwing process instance waits until the signal is delivered to all catching process instances. The catching process instances are also notified in the same transaction as the throwing process instance, which means that if one of the notified instances produces a technical error (throws an exception), all involved instances fail.

A signal can also be delivered asynchronously. In this case it is determined which handlers are active at the time the throwing signal event is reached. For each active handler, an asynchronous notification message (Job) is stored and delivered by the JobExecutor.

Graphical notation

An intermediate signal throw event is visualized as a typical intermediate event (circle with smaller circle inside), with the signal icon inside. The signal icon is black (filled), to indicate its throw semantics.

XML representation

A signal intermediate event is defined as an intermediate throwing event. The specific type sub-element is in this case a signalEventDefinition element.

<intermediateThrowEvent id="signal">

<signalEventDefinition signalRef="newCustomerSignal" />

</intermediateThrowEvent>

An asynchronous signal event would look like this:

<intermediateThrowEvent id="signal">

<signalEventDefinition signalRef="newCustomerSignal" flowable:async="true" />

</intermediateThrowEvent>

Example

See the section on signal event definitions.

Compensation Intermediate Throwing Event

Description

An intermediate throwing compensation event can be used to trigger compensation.

Triggering compensation: Compensation can either be triggered for a designated activity or for the scope that hosts the compensation event. Compensation is performed through execution of the compensation handler associated with an activity.

When compensation is thrown for an activity, the associated compensation handler is executed the same number of times the activity completed successfully.

If compensation is thrown for the current scope, all activities within the current scope are compensated, which includes activities on concurrent branches.

Compensation is triggered hierarchically: if the activity to be compensated is a sub-process, compensation is triggered for all activities contained in the sub-process. If the sub-process has nested activities, compensation is thrown recursively. However, compensation is not propagated to the "upper levels" of the process: if compensation is triggered within a sub-process, it is not propagated to activities outside of the sub-process scope. The BPMN specification states that compensation is triggered for activities at "the same level of sub-process".

In Flowable, compensation is performed in reverse order of execution. This means that whichever activity completed last is compensated first, and so on.

The intermediate throwing compensation event can be used to compensate transaction sub-processes that competed successfully.

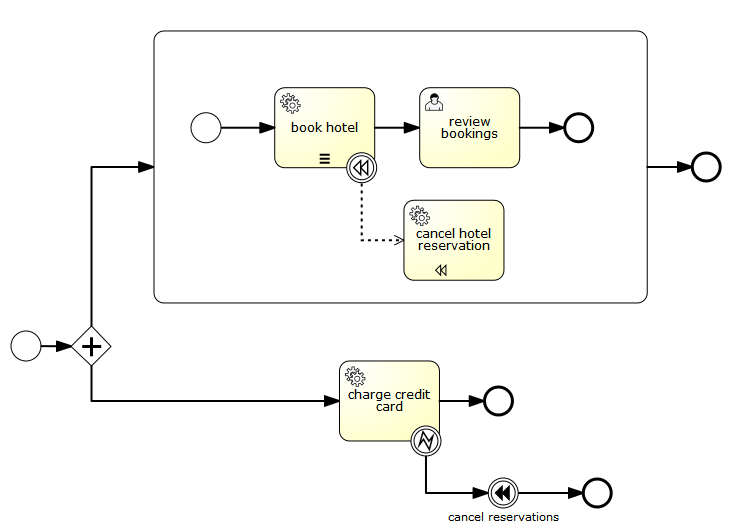

Note: If compensation is thrown within a scope that contains a sub-process, and the sub-process contains activities with compensation handlers, compensation is only propagated to the sub-process if it has completed successfully when compensation is thrown. If some of the activities nested inside the sub-process have completed and have attached compensation handlers, the compensation handlers are not executed if the sub-process containing these activities is not completed yet. Consider the following example:

In this process we have two concurrent executions: one executing the embedded sub-process and one executing the "charge credit card" activity. Let’s assume both executions are started and the first concurrent execution is waiting for a user to complete the "review bookings" task. The second execution performs the "charge credit card" activity and an error is thrown, which causes the "cancel reservations" event to trigger compensation. At this point the parallel sub-process is not yet completed which means that the compensation event is not propagated to the sub-process and consequently the "cancel hotel reservation" compensation handler is not executed. If the user task (and therefore the embedded sub-process) completes before the "cancel reservations" is performed, compensation is propagated to the embedded sub-process.

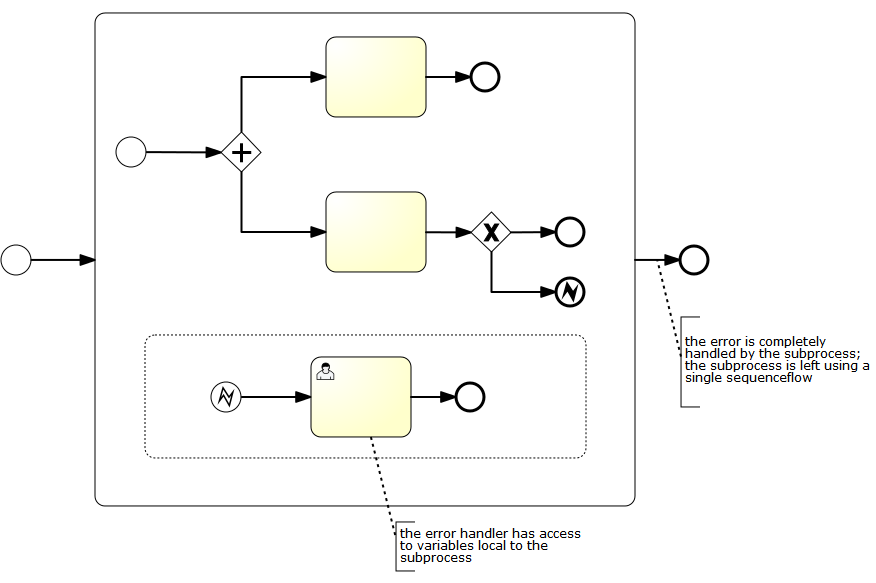

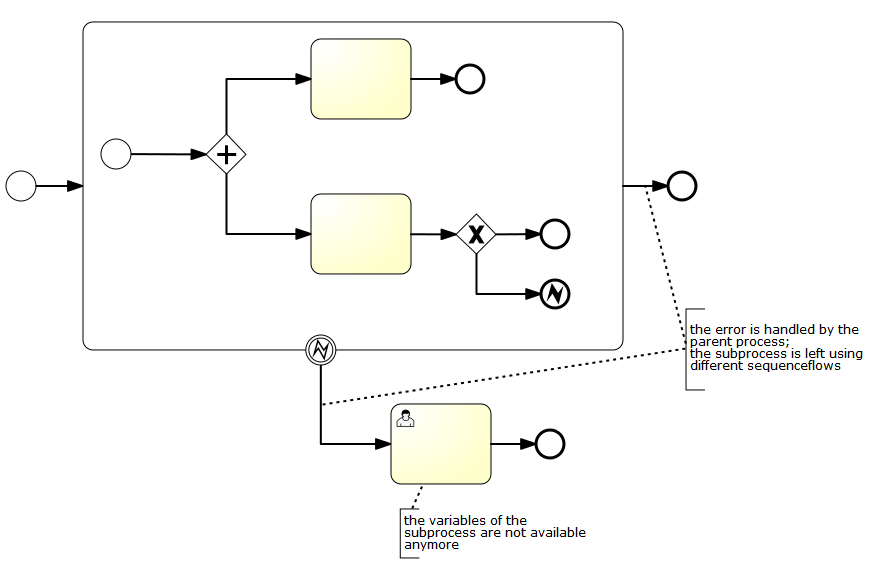

Process variables: When compensating an embedded sub-process, the execution used for executing the compensation handlers has access to the local process variables of the sub-process in the state they were in when the sub-process completed execution. To achieve this, a snapshot of the process variables associated with the scope execution (execution created for executing the sub-process) is taken. From this, a couple of implications follow:

The compensation handler does not have access to variables added to concurrent executions created inside the sub-process scope.

Process variables associated with executions higher up in the hierarchy (for instance, process variables associated with the process instance execution) are not contained in the snapshot: the compensation handler has access to these process variables in the state they are in when compensation is thrown.

A variable snapshot is only taken for embedded sub-processes, not for other activities.

Current limitations:

waitForCompletion="false" is currently unsupported. When compensation is triggered using the intermediate throwing compensation event, the event is only left after compensation completed successfully.

Compensation itself is currently performed by concurrent executions. The concurrent executions are started in reverse order to which the compensated activities completed.

Compensation is not propagated to sub-process instances spawned by call activities.

Graphical notation

An intermediate compensation throw event is visualized as a typical intermediate event (circle with smaller circle inside), with the compensation icon inside. The compensation icon is black (filled), to indicate its throw semantics.

XML representation

A compensation intermediate event is defined as an intermediate throwing event. The specific type sub-element is in this case a compensateEventDefinition element.

<intermediateThrowEvent id="throwCompensation">

<compensateEventDefinition />

</intermediateThrowEvent>

In addition, the optional argument activityRef can be used to trigger compensation of a specific scope or activity:

<intermediateThrowEvent id="throwCompensation">

<compensateEventDefinition activityRef="bookHotel" />

</intermediateThrowEvent>

Sequence Flow

Description

A sequence flow is the connector between two elements of a process. After an element is visited during process execution, all outgoing sequence flows will be followed. This means that the default nature of BPMN 2.0 is to be parallel: two outgoing sequence flows will create two separate, parallel paths of execution.

Graphical notation

A sequence flow is visualized as an arrow going from the source element towards the target element. The arrow always points towards the target.

XML representation

Sequence flows need to have a process-unique id and references to an existing source and target element.

<sequenceFlow id="flow1" sourceRef="theStart" targetRef="theTask" />

Conditional sequence flow

Description

A sequence flow can have a condition defined on it. When a BPMN 2.0 activity is left, the default behavior is to evaluate the conditions on the outgoing sequence flows. When a condition evaluates to true, that outgoing sequence flow is selected. When multiple sequence flows are selected that way, multiple executions will be generated and the process will be continued in a parallel way.

Note: the above holds for BPMN 2.0 activities (and events), but not for gateways. Gateways will handle sequence flows with conditions in specific ways, depending on the gateway type.

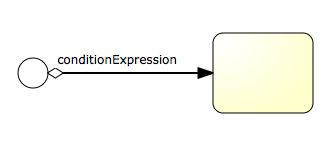

Graphical notation

A conditional sequence flow is visualized as a regular sequence flow, with a small diamond at the beginning. The condition expression is shown next to the sequence flow.

XML representation

A conditional sequence flow is represented in XML as a regular sequence flow, containing a conditionExpression sub-element. Note that currently only tFormalExpressions are supported, Omitting the xsi:type="" definition will simply default to the only supported type of expressions.

<sequenceFlow id="flow" sourceRef="theStart" targetRef="theTask">

<conditionExpression xsi:type="tFormalExpression">

<![CDATA[${order.price > 100 && order.price < 250}]]>

</conditionExpression>

</sequenceFlow>

Currently, conditionalExpressions can only be used with UEL. Detailed information about these can be found in the section on Expressions. The expression used should resolve to a boolean value, otherwise an exception is thrown while evaluating the condition.

- The example below references the data of a process variable, in the typical JavaBean style through getters.

<conditionExpression xsi:type="tFormalExpression">

<![CDATA[${order.price > 100 && order.price < 250}]]>

</conditionExpression>

- This example invokes a method that resolves to a boolean value.

<conditionExpression xsi:type="tFormalExpression">

<![CDATA[${order.isStandardOrder()}]]>

</conditionExpression>

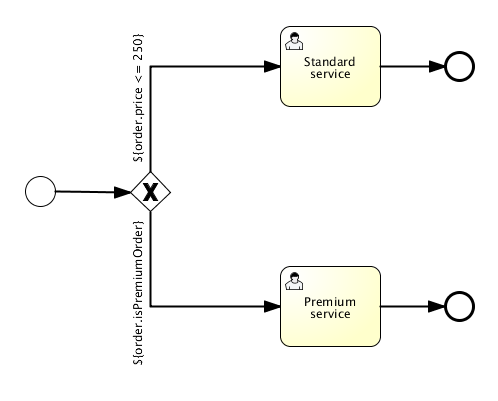

The Flowable distribution contains the following example process using value and method expressions (see org.flowable.examples.bpmn.expression):

Default sequence flow

Description

All BPMN 2.0 tasks and gateways can have a default sequence flow. This sequence flow is only selected as the outgoing sequence flow for that activity if and only if none of the other sequence flows could be selected. Conditions on a default sequence flow are always ignored.

Graphical notation

A default sequence flow is visualized as a regular sequence flow, with a 'slash' marker at the beginning.

XML representation

A default sequence flow for a certain activity is defined by the default attribute on that activity. The following XML snippet shows an example of an exclusive gateway that has as default sequence flow, flow 2. Only when conditionA and conditionB both evaluate to false, will it be chosen as the outgoing sequence flow for the gateway.

<exclusiveGateway id="exclusiveGw" name="Exclusive Gateway" default="flow2" />

<sequenceFlow id="flow1" sourceRef="exclusiveGw" targetRef="task1">

<conditionExpression xsi:type="tFormalExpression">${conditionA}</conditionExpression>

</sequenceFlow>

<sequenceFlow id="flow2" sourceRef="exclusiveGw" targetRef="task2"/>

<sequenceFlow id="flow3" sourceRef="exclusiveGw" targetRef="task3">

<conditionExpression xsi:type="tFormalExpression">${conditionB}</conditionExpression>

</sequenceFlow>

Which corresponds with the following graphical representation:

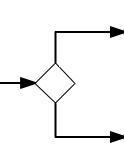

Gateways

A gateway is used to control the flow of execution (or as the BPMN 2.0 describes, the tokens of execution). A gateway is capable of consuming or generating tokens.

A gateway is graphically visualized as a diamond shape, with an icon inside. The icon shows the type of gateway.

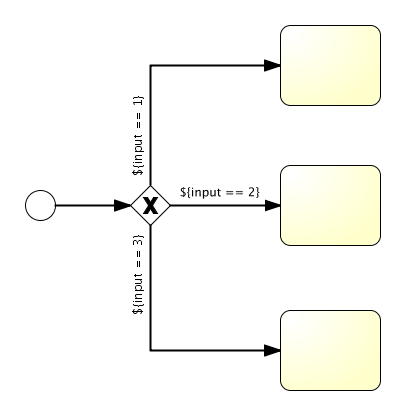

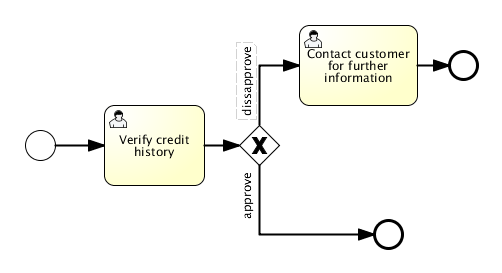

Exclusive Gateway

Description

An exclusive gateway (also called the XOR gateway or more technical the exclusive data-based gateway), is used to model a decision in the process. When the execution arrives at this gateway, all outgoing sequence flows are evaluated in the order in which they are defined. The first sequence flow whose condition evaluates to true (or doesn’t have a condition set, conceptually having a 'true' defined on the sequence flow) is selected for continuing the process.

Note that the semantics of the outgoing sequence flow is different in this case to that of the general case in BPMN 2.0. While, in general, all sequence flows whose condition evaluates to true are selected to continue in a parallel way, only one sequence flow is selected when using the exclusive gateway. If multiple sequence flows have a condition that evaluates to true, the first one defined in the XML (and only that one!) is selected for continuing the process. If no sequence flow can be selected, an exception will be thrown.

Graphical notation

An exclusive gateway is visualized as a typical gateway (a diamond shape) with an 'X' icon inside, referring to the XOR semantics. Note that a gateway without an icon inside defaults to an exclusive gateway. The BPMN 2.0 specification does not permit use of both the diamond with and without an X in the same process definition.

XML representation

The XML representation of an exclusive gateway is straight-forward: one line defining the gateway and condition expressions defined on the outgoing sequence flows. See the section on conditional sequence flow to see which options are available for such expressions.

Take, for example, the following model:

Which is represented in XML as follows:

<exclusiveGateway id="exclusiveGw" name="Exclusive Gateway" />

<sequenceFlow id="flow2" sourceRef="exclusiveGw" targetRef="theTask1">

<conditionExpression xsi:type="tFormalExpression">${input == 1}</conditionExpression>

</sequenceFlow>

<sequenceFlow id="flow3" sourceRef="exclusiveGw" targetRef="theTask2">

<conditionExpression xsi:type="tFormalExpression">${input == 2}</conditionExpression>

</sequenceFlow>

<sequenceFlow id="flow4" sourceRef="exclusiveGw" targetRef="theTask3">

<conditionExpression xsi:type="tFormalExpression">${input == 3}</conditionExpression>

</sequenceFlow>

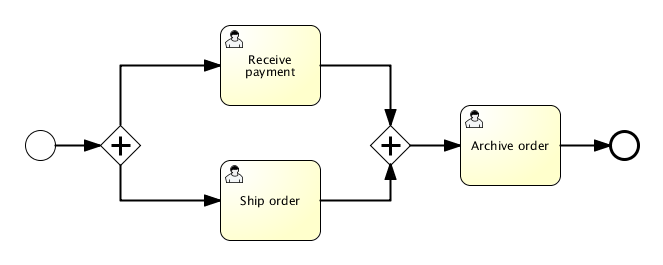

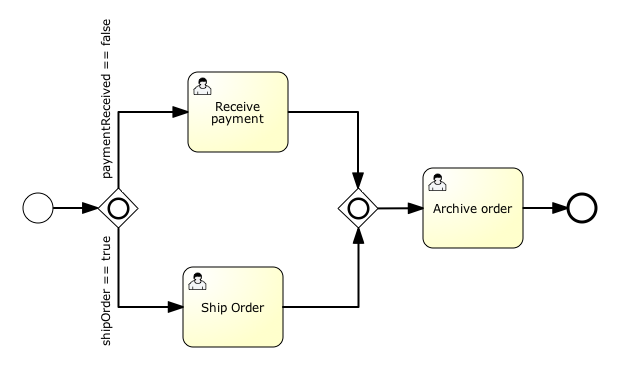

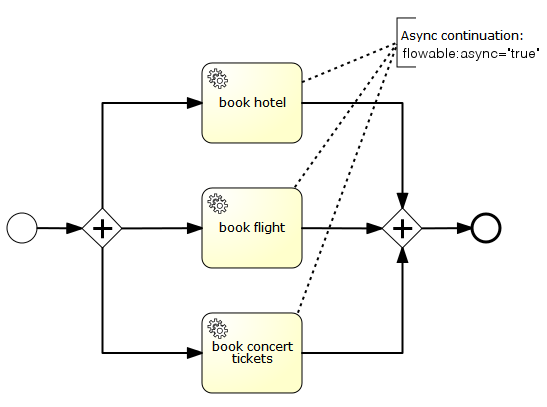

Parallel Gateway

Description

Gateways can also be used to model concurrency in a process. The most straightforward gateway to introduce concurrency in a process model, is the Parallel Gateway, which allows you to fork into multiple paths of execution or join multiple incoming paths of execution.

The functionality of the parallel gateway is based on the incoming and outgoing sequence flow:

fork: all outgoing sequence flows are followed in parallel, creating one concurrent execution for each sequence flow.

join: all concurrent executions arriving at the parallel gateway wait in the gateway until an execution has arrived for each of the incoming sequence flows. Then the process continues past the joining gateway.

Note that a parallel gateway can have both fork and join behavior, if there are multiple incoming and outgoing sequence flows for the same parallel gateway. In this case, the gateway will first join all incoming sequence flows before splitting into multiple concurrent paths of executions.

An important difference with other gateway types is that the parallel gateway does not evaluate conditions. If conditions are defined on the sequence flows connected with the parallel gateway, they are simply ignored.

Graphical Notation

A parallel gateway is visualized as a gateway (diamond shape) with the 'plus' symbol inside, referring to the 'AND' semantics.

XML representation

Defining a parallel gateway needs one line of XML:

<parallelGateway id="myParallelGateway" />

The actual behavior (fork, join or both), is defined by the sequence flow connected to the parallel gateway.

For example, the model above comes down to the following XML:

<startEvent id="theStart" />

<sequenceFlow id="flow1" sourceRef="theStart" targetRef="fork" />

<parallelGateway id="fork" />

<sequenceFlow sourceRef="fork" targetRef="receivePayment" />

<sequenceFlow sourceRef="fork" targetRef="shipOrder" />

<userTask id="receivePayment" name="Receive Payment" />

<sequenceFlow sourceRef="receivePayment" targetRef="join" />

<userTask id="shipOrder" name="Ship Order" />

<sequenceFlow sourceRef="shipOrder" targetRef="join" />

<parallelGateway id="join" />

<sequenceFlow sourceRef="join" targetRef="archiveOrder" />

<userTask id="archiveOrder" name="Archive Order" />

<sequenceFlow sourceRef="archiveOrder" targetRef="theEnd" />

<endEvent id="theEnd" />

In the example above, after the process is started, two tasks will be created:

ProcessInstance pi = runtimeService.startProcessInstanceByKey("forkJoin");

TaskQuery query = taskService.createTaskQuery()

.processInstanceId(pi.getId())

.orderByTaskName()

.asc();

List<Task> tasks = query.list();

assertEquals(2, tasks.size());

Task task1 = tasks.get(0);

assertEquals("Receive Payment", task1.getName());

Task task2 = tasks.get(1);

assertEquals("Ship Order", task2.getName());

When these two tasks are completed, the second parallel gateway will join the two executions and since there is only one outgoing sequence flow, no concurrent paths of execution will be created, and only the Archive Order task will be active.

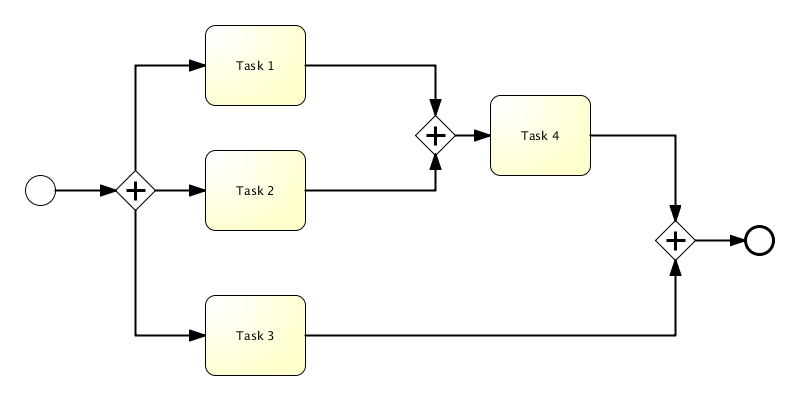

Note that a parallel gateway does not need to be 'balanced' (a matching number of incoming/outgoing sequence flow for corresponding parallel gateways). A parallel gateway will simply wait for all incoming sequence flows and create a concurrent path of execution for each outgoing sequence flow, not influenced by other constructs in the process model. So, the following process is legal in BPMN 2.0:

Inclusive Gateway

Description

The Inclusive Gateway can be seen as a combination of an exclusive and a parallel gateway. Like an exclusive gateway you can define conditions on outgoing sequence flows and the inclusive gateway will evaluate them. But the main difference is that the inclusive gateway can take more than one sequence flow, like the parallel gateway.

The functionality of the inclusive gateway is based on the incoming and outgoing sequence flows:

fork: all outgoing sequence flow conditions are evaluated and for the sequence flow conditions that evaluate to true the flows are followed in parallel, creating one concurrent execution for each sequence flow.

join: all concurrent executions arriving at the inclusive gateway wait at the gateway until an execution has arrived for each of the incoming sequence flows that have a process token. This is an important difference with the parallel gateway. So, in other words, the inclusive gateway will only wait for the incoming sequence flows that will be executed. After the join, the process continues past the joining inclusive gateway.

Note that an inclusive gateway can have both fork and join behavior, if there are multiple incoming and outgoing sequence flows for the same inclusive gateway. In this case, the gateway will first join all incoming sequence flows that have a process token, before splitting into multiple concurrent paths of executions for the outgoing sequence flows that have a condition that evaluates to true.

Graphical Notation

An inclusive gateway is visualized as a gateway (diamond shape) with the 'circle' symbol inside.

XML representation

Defining an inclusive gateway needs one line of XML:

<inclusiveGateway id="myInclusiveGateway" />

The actual behavior (fork, join or both), is defined by the sequence flows connected to the inclusive gateway.

For example, the model above comes down to the following XML:

<startEvent id="theStart" />

<sequenceFlow id="flow1" sourceRef="theStart" targetRef="fork" />

<inclusiveGateway id="fork" />

<sequenceFlow sourceRef="fork" targetRef="receivePayment" >

<conditionExpression xsi:type="tFormalExpression">${paymentReceived == false}</conditionExpression>

</sequenceFlow>

<sequenceFlow sourceRef="fork" targetRef="shipOrder" >

<conditionExpression xsi:type="tFormalExpression">${shipOrder == true}</conditionExpression>

</sequenceFlow>

<userTask id="receivePayment" name="Receive Payment" />

<sequenceFlow sourceRef="receivePayment" targetRef="join" />

<userTask id="shipOrder" name="Ship Order" />

<sequenceFlow sourceRef="shipOrder" targetRef="join" />

<inclusiveGateway id="join" />

<sequenceFlow sourceRef="join" targetRef="archiveOrder" />

<userTask id="archiveOrder" name="Archive Order" />

<sequenceFlow sourceRef="archiveOrder" targetRef="theEnd" />

<endEvent id="theEnd" />

In the example above, after the process is started, two tasks will be created if the process variables paymentReceived == false and shipOrder == true. If only one of these process variables equals true, only one task will be created. If no condition evaluates to true an exception is thrown. This can be prevented by specifying a default outgoing sequence flow. In the following example one task will be created, the ship order task:

HashMap<String, Object> variableMap = new HashMap<String, Object>();

variableMap.put("receivedPayment", true);

variableMap.put("shipOrder", true);

ProcessInstance pi = runtimeService.startProcessInstanceByKey("forkJoin");

TaskQuery query = taskService.createTaskQuery()

.processInstanceId(pi.getId())

.orderByTaskName()

.asc();

List<Task> tasks = query.list();

assertEquals(1, tasks.size());

Task task = tasks.get(0);

assertEquals("Ship Order", task.getName());

When this task is completed, the second inclusive gateway will join the two executions and as there is only one outgoing sequence flow, no concurrent paths of execution will be created, and only the Archive Order task will be active.

Note that an inclusive gateway does not need to be 'balanced' (a matching number of incoming/outgoing sequence flow for corresponding inclusive gateways). An inclusive gateway will simply wait for all incoming sequence flow and create a concurrent path of execution for each outgoing sequence flow, not influenced by other constructs in the process model.

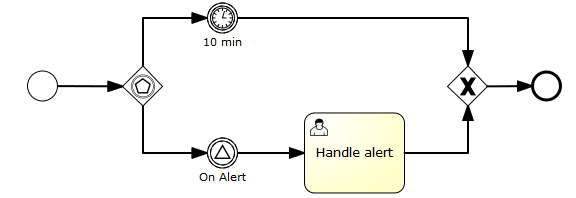

Event-based Gateway

Description

The Event-based Gateway provides a way to take a decision based on events. Each outgoing sequence flow of the gateway needs to be connected to an intermediate catching event. When process execution reaches an Event-based Gateway, the gateway acts like a wait state: execution is suspended. In addition, for each outgoing sequence flow, an event subscription is created.

Note the sequence flows running out of an Event-based Gateway are different from ordinary sequence flows. These sequence flows are never actually "executed". On the contrary, they allow the process engine to determine which events an execution arriving at an Event-based Gateway needs to subscribe to. The following restrictions apply:

An Event-based Gateway must have two or more outgoing sequence flows.

An Event-based Gateway must only be connected to elements of type intermediateCatchEvent (Receive Tasks after an Event-based Gateway are not supported by Flowable).

An intermediateCatchEvent connected to an Event-based Gateway must have a single incoming sequence flow.

Graphical notation

An Event-based Gateway is visualized as a diamond shape like other BPMN gateways with a special icon inside.

XML representation

The XML element used to define an Event-based Gateway is eventBasedGateway.

Example(s)

The following process is an example of a process with an Event-based Gateway. When the execution arrives at the Event-based Gateway, process execution is suspended. In addition, the process instance subscribes to the alert signal event and creates a timer that fires after 10 minutes. This effectively causes the process engine to wait for ten minutes for a signal event. If the signal occurs within 10 minutes, the timer is cancelled and execution continues after the signal. If the signal is not fired, execution continues after the timer and the signal subscription is canceled.

<definitions id="definitions"

xmlns="http://www.omg.org/spec/BPMN/20100524/MODEL"

xmlns:flowable="http://flowable.org/bpmn"

targetNamespace="Examples">

<signal id="alertSignal" name="alert" />

<process id="catchSignal">

<startEvent id="start" />

<sequenceFlow sourceRef="start" targetRef="gw1" />

<eventBasedGateway id="gw1" />

<sequenceFlow sourceRef="gw1" targetRef="signalEvent" />

<sequenceFlow sourceRef="gw1" targetRef="timerEvent" />

<intermediateCatchEvent id="signalEvent" name="Alert">

<signalEventDefinition signalRef="alertSignal" />

</intermediateCatchEvent>

<intermediateCatchEvent id="timerEvent" name="Alert">

<timerEventDefinition>

<timeDuration>PT10M</timeDuration>

</timerEventDefinition>

</intermediateCatchEvent>

<sequenceFlow sourceRef="timerEvent" targetRef="exGw1" />

<sequenceFlow sourceRef="signalEvent" targetRef="task" />

<userTask id="task" name="Handle alert"/>

<exclusiveGateway id="exGw1" />

<sequenceFlow sourceRef="task" targetRef="exGw1" />

<sequenceFlow sourceRef="exGw1" targetRef="end" />

<endEvent id="end" />

</process>

</definitions>

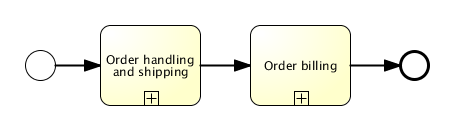

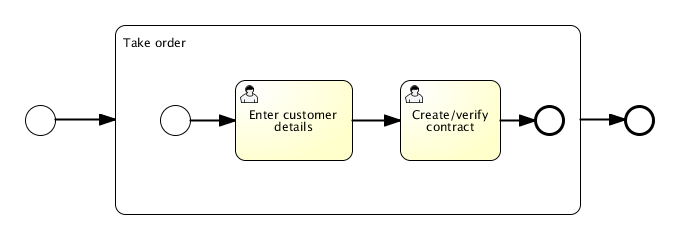

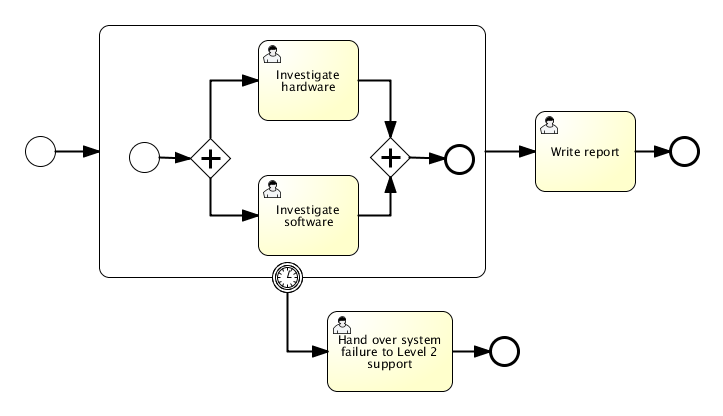

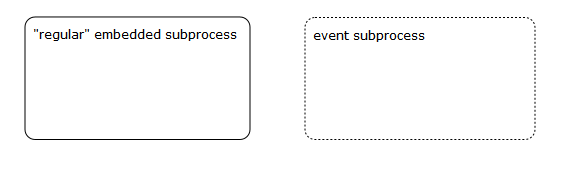

Tasks

User Task

Description

A 'user task' is used to model work that needs to be done by a human. When the process execution arrives at such a user task, a new task is created in the task list of any users or groups assigned to that task.

Graphical notation

A user task is visualized as a typical task (rounded rectangle), with a small user icon in the left upper corner.

XML representation

A user task is defined in XML as follows. The id attribute is required, the name attribute is optional.

<userTask id="theTask" name="Important task" />

A user task can also have a description. In fact, any BPMN 2.0 element can have a description. A description is defined by adding the documentation element.

<userTask id="theTask" name="Schedule meeting" >

<documentation>

Schedule an engineering meeting for next week with the new hire.

</documentation>

The description text can be retrieved from the task in the standard Java way:

task.getDescription()

Due Date

Each task has a field indicating the due date of that task. The Query API can be used to query for tasks that are due on, before or after a given date.

There is an activity extension that allows you to specify an expression in your task-definition to set the initial due date of a task when it is created. The expression should always resolve to a java.util.Date, java.util.String (ISO8601 formatted), ISO8601 time-duration (for example, PT50M) or null. For example, you could use a date that was entered in a previous form in the process or calculated in a previous Service Task. If a time-duration is used, the due-date is calculated based on the current time and incremented by the given period. For example, when "PT30M" is used as dueDate, the task is due in thirty minutes from now.

<userTask id="theTask" name="Important task" flowable:dueDate="${dateVariable}"/>

The due date of a task can also be altered using the TaskService or in TaskListeners using the passed DelegateTask.

User assignment

A user task can be directly assigned to a user. This is done by defining a humanPerformer sub element. Such a humanPerformer definition needs a resourceAssignmentExpression that actually defines the user. Currently, only formalExpressions are supported.

<process >

...

<userTask id='theTask' name='important task' >

<humanPerformer>

<resourceAssignmentExpression>

<formalExpression>kermit</formalExpression>

</resourceAssignmentExpression>

</humanPerformer>

</userTask>

Only one user can be assigned as the human performer for the task. In Flowable terminology, this user is called the assignee. Tasks that have an assignee are not visible in the task lists of other people and can be found in the personal task list of the assignee instead.

Tasks directly assigned to users can be retrieved through the TaskService as follows:

List<Task> tasks = taskService.createTaskQuery().taskAssignee("kermit").list();

Tasks can also be put in the candidate task list of people. In this case, the potentialOwner construct must be used. The usage is similar to the humanPerformer construct. Do note that it is necessary to specify if it is a user or a group defined for each element in the formal expression (the engine cannot guess this).

<process >

...

<userTask id='theTask' name='important task' >

<potentialOwner>

<resourceAssignmentExpression>

<formalExpression>user(kermit), group(management)</formalExpression>

</resourceAssignmentExpression>

</potentialOwner>

</userTask>

Tasks defined with the potential owner construct can be retrieved as follows (or a similar TaskQuery usage as for the tasks with an assignee):

List<Task> tasks = taskService.createTaskQuery().taskCandidateUser("kermit");